CS4610: Lecture 6 - Sensors for Mobile Robots

Sensor Characteristics

- proprioceptive sensors measure information internal to the robot, including the relative motion and forces between robot parts, temperatures, electrical voltages and currents, etc.

- exteroceptive sensors measure information about the external environment relative to the robot, such as the distance from the robot to another object

- we have already discussed proprioceptive sensors, in particular shaft rotation sensors, in some detail; today we’ll focus on exteroception

- passive sensors rely on incident energy (e.g. camera, compass)

- active sensors emit their own energy and then measure its effects (e.g. laser rangefinder, sonar)

- resolution measures how finely a sensor can discern changes, typically either in the sensing domain (e.g. the minimum difference in temperature detectable by a thermometer) or spatially (e.g. for a camera, resolution usually refers to the number of pixels)

- bandwidth essentially refers to temporal resolution, i.e. the maximum (and sometimes minimum) detectable rate of change

- accuracy refers to the fidelity of a sensor to actually report the true value of the sensed quantity; in most cases it would be difficult for a sensor with low resolution to have high accuracy, but it is entirely possible to have high resolution but low accuracy

- repeatability refers to the ability of the sensor to predictably return the same measurement in the same physical situation; a sensor with good resolution and repeatability can usually be calibrated to improve its accuracy

- aliasing is a behavior where the sensor can report the same measurement for more than one physical situation; for example, the IR sensors we’ll use report low values both for very near and very far objects

Bumpers and Tactile Sensors

- possibly the simplest type of exteroceptive sensor is a switch that is triggered when a robot makes contact with an object in the environment

- many arrangements and variations are possible; e.g. there could be an array of touch switches, a tube surrounding the robot whose internal air pressure increases when contact is made, whiskers, etc.

- the iRobot Roomba and its educational version, the Create, have semicircular bumpers with switches

- while switches are binary devices (on or off), there are also many types of sensor which can detect a range of contact forces

- for example, FlexiForce sensors detect pressure

- there is much more to what we typically consider our sense of “touch” than unidirectional pressure sensing; the robot Obrero, developed at MIT by Eduardo Torres-Jara, is one example of a research project with a more advanced type of tactile sensor

Range Sensors

- it will clearly be helpful to detect nearby objects without actually colliding

- sonar sensors emit pulses of sound and measure the echo time

- distance to the object is thus $d = ct/2$, where $t$ is the measured echo time and $c$ is the speed of sound in air (about 343 m/s at room temperature)

- usually high-frequency sound is used to minimize annoyance, but there is still typically audible clicking at the pulse rate

- multiple sonars operating near each other usually need to be sequenced to minimize interference

- environmental sound and unwanted reflections can also cause problems
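The echo-time relation above can be sketched in a few lines of Python. The function name `sonar_distance` and the default value of 343 m/s (dry air at roughly 20 °C) are assumptions made for this example, not values taken from any particular sensor.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # assumed: dry air at about 20 degrees C


def sonar_distance(echo_time_s, c=SPEED_OF_SOUND_M_PER_S):
    """Return the one-way distance d = c * t / 2 for a round-trip echo time t."""
    if echo_time_s < 0:
        raise ValueError("echo time must be non-negative")
    # the pulse travels to the object and back, hence the division by 2
    return c * echo_time_s / 2.0


if __name__ == "__main__":
    # an echo that arrives 10 ms after the pulse was emitted
    print(sonar_distance(0.010), "m")
```

Since the speed of sound varies with temperature, a real system would likely make `c` a calibrated parameter rather than a constant.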

- infrared sensors, as we will use in lab, often operate on a triangulation principle

- an IR emitter sends a narrow focused beam out perpendicular to the sensor

- let $d$ be the distance from the sensor to an object in the environment

- some of the reflected light is collected by a lens with focal length $f$ adjacent to the emitter at a fixed baseline distance $b$

- the focused light hits a position sensitive device (PSD) which reports its horizontal location as $x$

- by similar triangles, $x/f = b/d$

- thus $d = fb/x$

- diagram TBD
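As a rough illustration of the triangulation principle, the sketch below computes the distance from the PSD reading using the similar-triangles relation $d = fb/x$. The function name `ir_distance` and the numeric values for the focal length and baseline are hypothetical and chosen only to make the example concrete.

```python
def ir_distance(x_m, focal_length_m, baseline_m):
    """Distance to the object from the PSD offset x, using d = f * b / x."""
    if x_m <= 0:
        raise ValueError("PSD offset must be positive")
    return focal_length_m * baseline_m / x_m


if __name__ == "__main__":
    f = 0.010  # assumed focal length: 10 mm
    b = 0.020  # assumed baseline: 20 mm
    # halving the PSD offset doubles the computed distance
    for x in (0.0010, 0.0005, 0.00025):
        print(x, ir_distance(x, f, b))
```

Because $d$ is inversely proportional to $x$, equal steps in $x$ correspond to ever larger steps in $d$ as the object gets farther away, so range resolution degrades with distance.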

- laser scanners like those from Sick and Hokuyo are currently popular, but much more expensive than the above range sensors (often 100x to 1000x the price)

- the original concept of a laser scanner is based on time-of-flight of a laser light pulse

- in practice, many commercial implementations do not measure time of flight (which would require expensive very high speed electronics), but use approaches based on different types of interferometry

- for example, the emitted light could be amplitude modulated at a frequency $f$ in the MHz range, yielding a wavelength of $\lambda = c/f$, where $c \approx 3 \times 10^8$ m/s is the speed of light in air

- the phase difference $\theta$ between the emitted and returned light is proportional to the distance $d$ to the object: $d = \lambda\theta/(4\pi)$ (the light travels a round trip of $2d$, so $\theta = 2\pi \cdot 2d/\lambda$)

- note that aliasing will occur for $d \ge \lambda/2$, but in practice the returned beam will be significantly dimmed due to attenuation at some distance anyway

- the beam is “scanned” across the scene by a spinning mirror, typically returning multiple range measurements in an angular band around the sensor

- a laser is used here mainly because it is easier to form a tightly collimated (low-dispersion) beam, which does not fan out very much over distance

- the frequency stability of a laser can also allow narrow band-pass filtering of the returned light, to minimize interference from other light sources
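The phase-based ranging idea, and the aliasing it implies, can be sketched as follows. The modulation frequency of 5 MHz is an assumed example value, and the function names are made up for this illustration; with that frequency the modulation wavelength is 60 m, so ranges repeat every 30 m.

```python
import math

C_LIGHT_M_PER_S = 3.0e8  # approximate speed of light in air


def modulation_wavelength(f_hz):
    """Wavelength of the amplitude modulation, lambda = c / f."""
    return C_LIGHT_M_PER_S / f_hz


def measured_phase(d_m, f_hz):
    """Phase shift after a round trip of 2*d, wrapped into [0, 2*pi)."""
    theta = 4.0 * math.pi * d_m / modulation_wavelength(f_hz)
    return theta % (2.0 * math.pi)


def phase_to_distance(theta_rad, f_hz):
    """Invert the phase relation: d = lambda * theta / (4*pi)."""
    return modulation_wavelength(f_hz) * theta_rad / (4.0 * math.pi)


if __name__ == "__main__":
    f = 5.0e6  # assumed example modulation frequency (5 MHz)
    # objects at 10 m and 40 m produce the same wrapped phase (aliasing)
    for d in (10.0, 40.0):
        theta = measured_phase(d, f)
        print(d, theta, phase_to_distance(theta, f))
```

Both distances map back to the same recovered range, which is the aliasing described above: the sensor alone cannot tell them apart.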

Compass

- we are all familiar with the operation of a simple 1-DoF compass

- when held so its sensing axis is approximately vertical, the pointer will align to the Earth’s magnetic field lines, which are approximately horizontal (except near the poles) and perpendicular to the equator

- miniature electronic compasses are now available which are based on the same Hall-effect sensing we studied before for shaft rotation

- here the Hall element is optimized to detect the relatively weak field of the Earth

- these sensors are easily upset by ferrous objects in the nearby environment; hence they usually work best only outdoors (the effects of ferrous parts of the robot itself are usually repeatable and so can be calibrated out)

Inertial

- accelerometers measure linear acceleration

- up to three perpendicular axes of measurement are now commonly available in chip-scale MEMS devices

- the measured acceleration can be due both to gravity and to motion

- sometimes the output is low pass filtered to try to remove the effects of motion, thus only measuring the direction of the gravity vector, i.e. “down”

- sometimes it is high pass filtered to try to remove the effects of gravity

- in theory, once gravity has been subtracted, we can integrate once to get velocity and again to get position

- in practice, this will always result in drift that increases with time

- gyroscopes measure angular velocity

- up to three perpendicular axes of measurement are now commonly available in chip-scale MEMS devices

- can be integrated once to get orientation, but again this is subject to drift

- often a 3 axis accelerometer and a 3 axis gyro are combined to make what is called a 6-DoF inertial measurement unit (IMU)

- in theory, this could allow relative pose estimation by integration (i.e. the translation and rotation of the IMU relative to its starting pose)

- in practice, the integrations will drift and quickly become useless

- some expensive IMUs are available with very low drift, that allow reliable relative pose estimation over periods of hours or more

- sometimes a 3 axis compass is also added to make a “9-DoF” IMU

- in practice with relatively inexpensive components, a 9-DoF IMU is often enough to recover 3D absolute orientation (i.e. rotational pose) with some reliability

- drift in orientation can be corrected if the compass data can be trusted

- in theory, the absolute orientation could then be used to subtract the gravity effect from the accelerometer data, and then a double integration could give a relative position, but this would still be subject to drift
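To get a feel for why double integration drifts, the sketch below integrates the output of a stationary accelerometer that has a small constant bias. The bias of 0.01 m/s² and the 100 Hz sample rate are assumed values for illustration only, and simple Euler integration is used rather than any particular IMU's algorithm.

```python
def integrate_position(accels, dt):
    """Double-integrate acceleration samples with Euler steps.

    Returns the final (velocity, position), both starting from zero.
    """
    v = 0.0
    p = 0.0
    for a in accels:
        v += a * dt  # first integration: acceleration -> velocity
        p += v * dt  # second integration: velocity -> position
    return v, p


if __name__ == "__main__":
    bias = 0.01  # assumed constant accelerometer bias, m/s^2
    dt = 0.01    # assumed sample period (100 Hz)
    for seconds in (60, 120):
        samples = [bias] * int(seconds / dt)
        v, p = integrate_position(samples, dt)
        print(seconds, "s:", v, "m/s,", p, "m")
```

The position error grows roughly with the square of elapsed time (about $\tfrac{1}{2} b t^2$ for a bias $b$), so doubling the duration roughly quadruples the drift even though the robot never moved.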

Beacons and Landmarks

Image and Depth

- cameras typically measure the intensity, and possibly the color, of light simultaneously coming in on a multitude of regularly spaced rays, one per pixel

- a regular camera does not measure the distance to the objects from which the light rays have travelled

- however, now there are a number of depth cameras, or just depth sensors, which do return this information (they may or may not also return intensity or color; if not, they can be paired with a regular camera)

- 3D laser scanners, such as the Velodyne, are similar in concept to the 2D scanners discussed above, but use many beams in parallel to scan a 3D volume

- time-of-flight cameras such as the Swiss Ranger combine a precise flood flash with a special image sensor where every pixel acts like a miniature laser PSD

- stereo vision systems like the Bumblebee2 pair two regular cameras offset by a distance called the baseline; the objects appear at slightly different locations in each camera depending on distance

- structured light systems like the Kinect are surprisingly similar to stereo vision, but replace one camera with a projector that imposes a known light pattern onto the scene

- we’ll talk more about cameras and depth sensors later in the course

Motion Capture

- environment-mounted mocap systems require either sensors, emitters, or both to be fixed to the environment around a fixed capture volume; these include both passive marker systems like Vicon, and active marker systems like PhaseSpace

- relative mocap approaches attempt to recover motion trajectories only from sensors attached to the moving object; these include both inertial systems like the XSens MVN and camera-based approaches

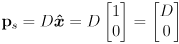

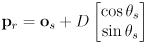

- the coordinate transformations we developed at the beginning of L3 are very useful for dealing with sensor data, especially when multiple position readings must be combined

- just as we defined the robot frame with parameters $(x, y, \theta)$ relative to the world frame, we can also define further child frames of the robot frame for any sensors

- for example, if a range sensor (e.g. an IR device) is located at a given position $(x_s, y_s)$ on the robot (i.e. $(x_s, y_s)$ is given in robot frame coordinates), and if it is facing in direction $\theta_s$ in robot frame, then the $3 \times 3$ homogeneous transformation matrix

$$T_s = \begin{bmatrix} \cos\theta_s & -\sin\theta_s & x_s \\ \sin\theta_s & \cos\theta_s & y_s \\ 0 & 0 & 1 \end{bmatrix}$$

can be used to transform a range point $(r, 0)$ in sensor frame to a point $(x_r, y_r)$ in robot frame, where $r$ is the range reading (distance from the sensor to the object)

- actually, in this case, the math boils down to

$$x_r = x_s + r\cos\theta_s, \quad y_r = y_s + r\sin\theta_s$$

because we have constructed the sensor frame so that range points are always along its $x$-axis

- this could be useful if we have multiple range sensors at different poses on the robot, and want to transform all their data into a common coordinate frame

- it is also the first step to transforming the data to world frame, which can be useful when we want to consider readings taken at different robot poses

- yes, putting data from multiple robot poses into one coordinate frame this way will be affected by any errors in odometry

- but note that if we are interested only in properties of the data relative to the robot’s current pose (or to some prior pose), the total odometric error from the robot’s start is not necessarily a factor; rather, if we’re careful, we only need to consider odometry error relative to that pose
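The two-step transformation described above (sensor frame to robot frame, then robot frame to world frame) might look like the following in Python. The function and parameter names (`xs`, `ys`, `theta_s` for the sensor pose in robot frame; `x`, `y`, `theta` for the robot pose in world frame) are chosen for this sketch, and angles are assumed to be in radians.

```python
import math


def sensor_to_robot(r, xs, ys, theta_s):
    """Map a range reading r along the sensor's x-axis into robot frame."""
    return (xs + r * math.cos(theta_s), ys + r * math.sin(theta_s))


def robot_to_world(point, x, y, theta):
    """Map a robot-frame point into world frame given robot pose (x, y, theta)."""
    px, py = point
    c, s = math.cos(theta), math.sin(theta)
    # rotate by theta, then translate by the robot position
    return (x + c * px - s * py, y + s * px + c * py)


if __name__ == "__main__":
    # assumed setup: sensor 10 cm ahead of the robot origin, facing left
    p_robot = sensor_to_robot(0.5, 0.1, 0.0, math.pi / 2)
    # assumed robot pose from odometry
    p_world = robot_to_world(p_robot, 1.0, 2.0, math.pi / 2)
    print(p_robot, p_world)
```

Keeping the two steps separate makes it easy to reuse the first one for every sensor on the robot and to apply the second only when readings from different robot poses need to be combined.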

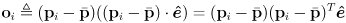

- often we really want to recognize features in the data that are more interesting than individual points

- line fitting is a good example: if the robot is looking at a wall, many of the data points may (approximately) fit a line

- there are a variety of ways to mathematically represent a line, and to extract the best fit line from a set of data points

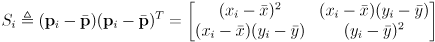

- we will study a handy technique based on principal component analysis (PCA), which is strongly related to the Eigendecomposition of a symmetric matrix called the scatter matrix, which is derived from the data

- one advantage of this approach is that it can be computed incrementally, i.e., with a constant amount of computation for each new data point

- start by transforming all

data points

data points  into world frame

into world frame - the fit line will pass through the centroid

- the rest of our job will be to find a unit tangent vector

that gives the line direction

that gives the line direction - if we were to re-center the data so that

was at the origin, then we could imagine spinning a “test vector”

was at the origin, then we could imagine spinning a “test vector”  around and taking its dot product with each data point considered as a vector:

around and taking its dot product with each data point considered as a vector:

each such dot product is a measure of the parallelism of the two vectors, which will peak when they are either pointing in the same or opposite directions; the peak will be the length of the original vector  (because

(because  )

) - if we then scale the original vector

by that dot product, we get a vector in the same direction as the original data point, with length ranging from 0 up to

by that dot product, we get a vector in the same direction as the original data point, with length ranging from 0 up to

- for a given setting of the test vector

, do this for all the data points, and call the average of the resulting

, do this for all the data points, and call the average of the resulting  the output vector

the output vector  corresponding to

corresponding to

- the key insight is that it can be shown that the tangent vector

can be defined as a test vector

can be defined as a test vector  which maximizes the length of

which maximizes the length of  ; i.e.

; i.e.  is should be the test vector that maximizes parallelism with all the vectors corresponding to the re-centered data points

is should be the test vector that maximizes parallelism with all the vectors corresponding to the re-centered data points- as long as the data has no rotational symmetries, there will be only two choices for

, and they will be anti-parallel (we will take either one)

, and they will be anti-parallel (we will take either one) - remarkably, it can also be shown that

is parallel to the corresponding

is parallel to the corresponding  , i.e. the transformation from the test vector to the maximal output vector only had a scaling, not a rotating effect

, i.e. the transformation from the test vector to the maximal output vector only had a scaling, not a rotating effect - this is the definition of an Eigenvector (and the scale factor is the corresponding Eigenvalue)

- to see how this helps, first observe that the above equation for

can be re-written with a matrix; the operation

can be re-written with a matrix; the operation  on two equal-length column vectors (here each is the same

on two equal-length column vectors (here each is the same  vector), is called an outer product, its result is a

vector), is called an outer product, its result is a  matrix

matrix

where we have used

,

,

- the average output vector

corresponding to a test vector

corresponding to a test vector  can be found by first averaging the

can be found by first averaging the  :

:

with

with

is the

is the  normalized scatter matrix for this dataset;

normalized scatter matrix for this dataset;  is the Eigenvector corresponding to its largest Eigenvalue

is the Eigenvector corresponding to its largest Eigenvalue- note that since the

were symmetric,

were symmetric,  will also be symmetric

will also be symmetric - label the elements of

like this:

like this:

- don’t we still have to find the

which maximizes the corresponding

which maximizes the corresponding  ?

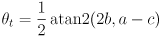

? - this may be a little surprising, and we will skip the derivation, but it can be shown that the pointing direction

of

of  can be calculated as

can be calculated as

- so

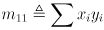

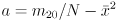
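A minimal batch version of this line fit, assuming the closed-form angle $\theta = \tfrac{1}{2}\,\mathrm{atan2}(2 s_{xy}, s_{xx} - s_{yy})$ for the dominant Eigenvector of a symmetric $2 \times 2$ matrix, could be sketched as below. The function name `fit_line` and the tuple-based point representation are choices made for this example.

```python
import math


def fit_line(points):
    """Fit a line to 2D points; return (centroid, unit tangent vector)."""
    n = len(points)
    if n < 2:
        raise ValueError("need at least two points")
    # centroid of the data
    cx = sum(x for x, _ in points) / n
    cy = sum(y for _, y in points) / n
    # elements of the normalized scatter matrix of the re-centered data
    sxx = sum((x - cx) ** 2 for x, _ in points) / n
    sxy = sum((x - cx) * (y - cy) for x, y in points) / n
    syy = sum((y - cy) ** 2 for _, y in points) / n
    # direction of the Eigenvector with the largest Eigenvalue
    theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
    return (cx, cy), (math.cos(theta), math.sin(theta))


if __name__ == "__main__":
    # noisy samples near the line y = x (made-up data)
    pts = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.1), (3.0, 2.9)]
    print(fit_line(pts))
```

Note that the returned tangent is only one of the two anti-parallel choices; callers that care about a consistent sign would need to pick a convention.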

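- a good way to do this incrementally (i.e. with a constant amount of computation for each new data point) is to rewrite the above formulas in terms of the moments of the data set:

$n$, $m_x = \sum_i x_i$, $m_y = \sum_i y_i$ (first moments)

$m_{xx} = \sum_i x_i^2$, $m_{xy} = \sum_i x_i y_i$, $m_{yy} = \sum_i y_i^2$ (second moments)

- these can be kept as running totals of the datapoint coordinates, so updating them requires only a constant number of additions and multiplications

- then it is not hard to show that the centroid $(\bar{x}, \bar{y})$ and the elements of the scatter matrix are

$$\bar{x} = \frac{m_x}{n}, \quad \bar{y} = \frac{m_y}{n}, \quad s_{xx} = \frac{m_{xx}}{n} - \bar{x}^2, \quad s_{xy} = \frac{m_{xy}}{n} - \bar{x}\bar{y}, \quad s_{yy} = \frac{m_{yy}}{n} - \bar{y}^2$$

- which again require only a constant number of arithmetic operations to recalculate once the moments have been updated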

- this matlab script demonstrates the approach
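The incremental update can also be sketched in Python. This is an independent illustration written for these notes, not a port of the script mentioned above; the class name `IncrementalLineFit` and its attribute names are hypothetical.

```python
import math


class IncrementalLineFit:
    """Line fit from running moments; constant work per added point."""

    def __init__(self):
        self.n = 0
        self.mx = self.my = 0.0               # first moments
        self.mxx = self.mxy = self.myy = 0.0  # second moments

    def add(self, x, y):
        """Update the running totals with one new data point."""
        self.n += 1
        self.mx += x
        self.my += y
        self.mxx += x * x
        self.mxy += x * y
        self.myy += y * y

    def fit(self):
        """Return (centroid, theta) where theta is the line direction."""
        if self.n < 2:
            raise ValueError("need at least two points")
        xb = self.mx / self.n
        yb = self.my / self.n
        # scatter matrix elements recovered from the moments
        sxx = self.mxx / self.n - xb * xb
        sxy = self.mxy / self.n - xb * yb
        syy = self.myy / self.n - yb * yb
        theta = 0.5 * math.atan2(2.0 * sxy, sxx - syy)
        return (xb, yb), theta


if __name__ == "__main__":
    lf = IncrementalLineFit()
    for x, y in [(0.0, 0.0), (1.0, 2.0), (2.0, 4.0)]:  # made-up points on y = 2x
        lf.add(x, y)
        if lf.n >= 2:
            print(lf.n, lf.fit())
```

One caveat with the moment form is numerical: subtracting large, nearly equal quantities (e.g. $m_{xx}/n - \bar{x}^2$ for data far from the origin) can lose precision, so it may help to express the points relative to a nearby reference point first.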
